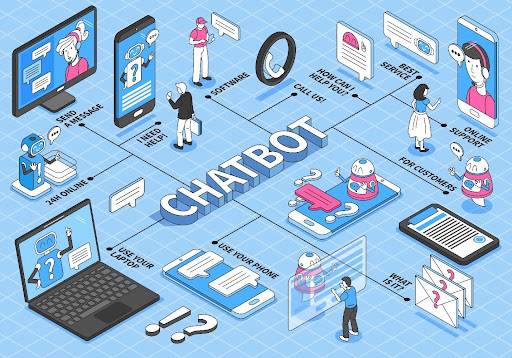

AI is changing how businesses operate and interact with their customers. The technology automates simple, everyday tasks, provides data insights, and helps people reach better-informed decisions. Yet as AI becomes more common in IT, worries about how it could be used are growing.

More and more companies are focusing on how to keep AI from reaching conclusions that could be harmful. That effort addresses the ethical issues that arise when computers process data and make decisions.

AI is a powerful technology with a vast array of benefits. Francesca Rossi, who leads AI ethics globally at IBM, thinks that to find out how useful it can be, we need to build a system of trust in both the technology itself and the people who make it. “Problems of bias, data handling, system capabilities, explainability, openness on data policies, and design decisions should be handled responsibly and transparently,” she states.

According to Olivia Gambelin, an AI ethicist and CEO of the ethics consulting firm Ethical Intelligence, AI ethics should concentrate on understanding how AI affects society, minimizing unintended effects, and encouraging beneficial global innovation. Gambelin says that operationalizing AI ethics means turning abstract ideas into specific, measurable behaviors. The goal is to make sure that technology is based on human values at its core.

Risk and Bias Areas for Artificial Intelligence

Kentaro Toyama, the W.K. Kellogg professor of community information at the University of Michigan School of Information, says that AI can be misused in many ways, and that many of those misuses are already happening. His examples include deepfake imagery that provides visual “evidence” of flagrant falsehoods, military drones that use AI to make kill decisions, and companies that make money by buying and selling AI-based inferences about you. He adds that “algorithmic fairness” is another hot subject in AI ethics.

Scott Zoldi, chief analytics officer at FICO, an analytics company that specializes in credit scoring services, says that the excitement about AI is at odds with the harsh reality of machine learning models that were built and deployed quickly. He says that these models may achieve a certain business goal, but only at the cost of adverse effects on different groups. Corporate users of machine learning models, he adds, “become irresponsible and crass in their decision-making, frequently not even monitoring or questioning outcomes.”

Lama Nachman, who leads Intel’s intelligent systems laboratories, says that AI systems often perform well on the data they were trained on, but many perform poorly when given new data from the real world. “This creates some safety problems, like an autonomous car misidentifying strange sights,” she says. She adds that these AI systems must be overseen and monitored to prevent drift over time.

Discussion on AI Ethics

An “AI ethics policy” is the set of rules and guidelines an organization follows when it develops and uses AI technologies. Nachman says such a policy is “usually based on a risk analysis approach,” in which the people who conceive, build, sell, and use these systems examine the risks commonly associated with AI technology. She says, “AI ethical principles generally include transparency, security, privacy, safety, inclusion, responsibility, justice, and human supervision.”

A formalized AI ethics policy is more than merely desirable. Gambelin says that as more AI regulations take effect and market demand for ethical technology grows, having one is becoming a matter of survival. AI-driven businesses that use ethics as their primary decision-making tool save time and money in the long run because they develop better, more innovative solutions.

Perspective for AI Ethics

Businesses developing AI technology should consider ethical issues from the outset of their projects. According to Anand Rao, who leads AI globally for the consulting firm PwC, “they must create the solutions from an ethical point of view.” Ethics “cannot merely be a checkbox exercise” completed when the product launches, he continues.

Only about 20% of businesses currently have an AI ethics framework, and as of 2021 just 35% of firms expected to improve how they manage AI systems and processes. Rao anticipates better results this year, though, since “responsible AI” was CEOs’ top AI priority in 2021.

IBM’s Rossi adds that to build confidence in AI, business and IT executives must take a holistic, multidisciplinary, and multi-stakeholder approach.

The trust system should ensure that people work together to find problems, discuss them, and solve them. She thinks this collaborative, multidisciplinary approach will give the best results and is the most likely to create a thorough and efficient environment for trustworthy AI.

Gambelin says AI ethics is “a complicated field of passionate people and dedicated companies.” She notes that the field is at a crossroads. Our ability to use ethics as a tool will enable us to realize our goals and aspirations. This is a unique opportunity for people to consider the benefits we hope to get from technology.