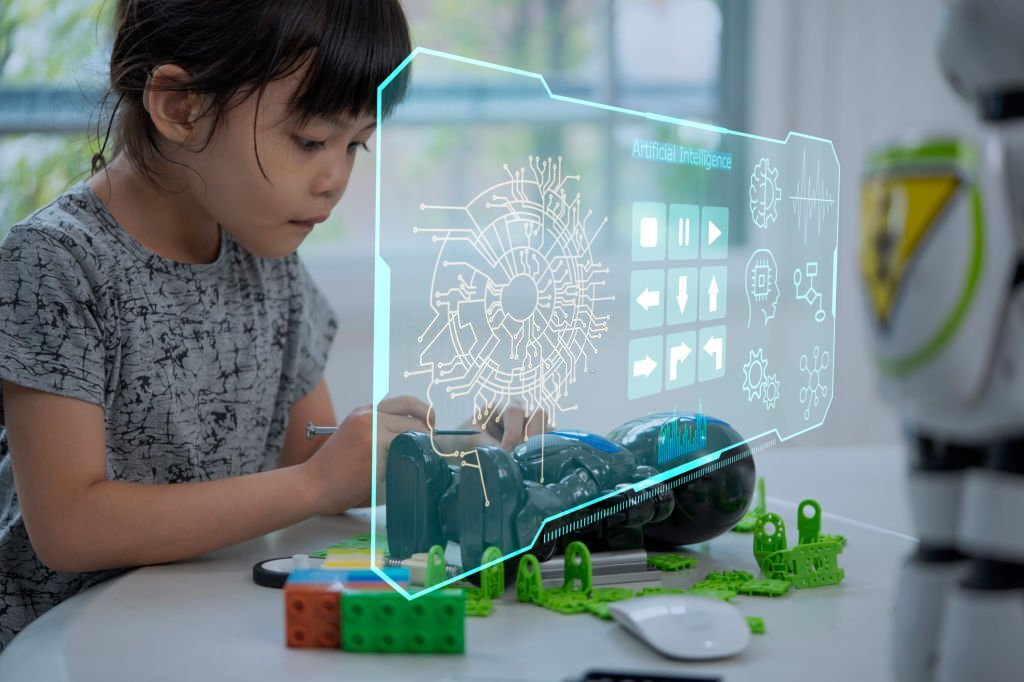

The “Dark Faces of AI” special issue begins here. AI has enormous potential to transform many industries. This breakthrough technology makes possible driverless cars, face recognition payments, guided robots, and more.

AI is one of the top five developing technologies for digital-first organizations. Because AI technology is getting better and more available, 70% of businesses will build AI architectures by 2021. AI is inevitable. Several academics are now studying AI. According to research, AI has the potential to improve consumer interactions and boost corporate advantages (e.g., by increasing efficiency, increasing effectiveness, and decreasing cost).

The benefits of AI get a lot of attention, but its drawbacks get far less, especially in academia. Given AI’s relevance and ubiquity, its negative effects on people, organizations, and society need more study. Because so little research exists on the dark side of AI, we present this special issue and invite academics to examine crucial AI concerns, particularly in electronic market contexts such as electronic commerce, social media, and emerging digital platforms.

The dangers of AI have been widely debated in the tech community. Job automation, the spread of fake news, and an AI-powered arms race are among the greatest risks.

AI-Automation Job Losses

“This economy has made a lot of low-paying jobs in the service sector,” said futurist Martin Ford to Built In. “That won’t last.”

As AI robots become smarter and more agile, fewer people will be needed to do the same work. AI is expected to generate 97 million new jobs by 2025, but if employers don’t upskill their workers, many may be left behind.

“If you’re flipping burgers at McDonald’s and automation increases, would one of these new careers suit you?” Ford said. “Or is it probable that the new career demands loads of schooling or training or maybe even inherent talents, really great interpersonal skills or creativity, that you may not have? Because computers are bad at those.”

Even jobs that require graduate degrees and post-college training are not immune to AI displacement.

According to technology guru Chris Messina, fields such as law and accounting are ripe for AI, and he warned that some of them may be devastated. AI is changing medicine, and Messina predicted “a huge shakeup” in law and accountancy as well.

“Many lawyers examine hundreds or thousands of pages of data and papers. Missing things is simple. An AI that can sift through and completely give the best contract for your result will definitely replace a lot of corporate attorneys.”

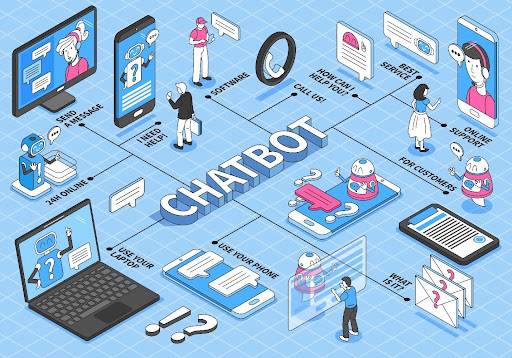

AI-based social manipulation

AI misuse includes social manipulation, according to 2018 research. In the 2022 election, Ferdinand Marcos, Jr. used a TikTok troll army to win over younger Filipino voters, proving this worry to be true.

TikTok’s AI system fills users’ feeds with material similar to what they have already viewed. This approach, together with the algorithm’s inability to filter out harmful and inaccurate information, has raised concerns about TikTok’s capacity to safeguard users.

Deepfakes have muddied politics, social media, and news. The technology lets you swap one figure for another in photos and videos. As a consequence, bad actors have another way to spread disinformation and war propaganda, making it nearly impossible to tell fact from fiction.

AI-based social surveillance

Ford worries about how AI will harm privacy and security in addition to its existential danger. China uses facial recognition in companies, schools, and other places. The Chinese government may collect enough data to trace a person’s actions, connections, and political opinions.

U.S. police use predictive policing algorithms to forecast where crimes will occur. These algorithms are shaped by arrest rates, which disproportionately affect Black neighborhoods. Authorities then over-police these communities, raising questions about whether democracies can avoid turning AI into an authoritarian tool.
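
The feedback loop described above can be illustrated with a toy simulation. This is only a sketch under stated assumptions: the function `simulate_feedback_loop`, the two neighborhoods, and every number are hypothetical, and it does not model any real predictive policing product. Both neighborhoods are given the same underlying crime rate; only their historical arrest counts differ.

```python
def simulate_feedback_loop(true_crime_rate, initial_arrests, patrols_per_round, rounds):
    """Send patrols in proportion to past arrests, then record the new arrests.

    Hypothetical toy model: every neighborhood has the same true crime rate,
    so any difference in the result comes from the historical data alone.
    """
    arrests = list(initial_arrests)
    for _ in range(rounds):
        total = sum(arrests)
        # The "prediction": patrol where arrests were recorded in the past.
        patrols = [patrols_per_round * a / total for a in arrests]
        # More patrols record more arrests, even though the true rate is equal.
        new_arrests = [p * true_crime_rate for p in patrols]
        arrests = [a + n for a, n in zip(arrests, new_arrests)]
    return arrests


# Neighborhood 0 starts with twice the recorded arrests of neighborhood 1.
history = simulate_feedback_loop(
    true_crime_rate=0.5, initial_arrests=[20, 10], patrols_per_round=30, rounds=5
)
print(history)
```

In this toy model the neighborhood that starts with more recorded arrests keeps receiving more patrols, so the gap in recorded arrests widens every round and the original imbalance never corrects itself.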

AI Biases

AI bias is also harmful. Humans produce AI, and people are prejudiced. Data bias and algorithmic bias may “amplify” each other.

“A.I. researchers are mostly male, who come from specific racial groups, who grew up in high socioeconomic regions, primarily individuals without disabilities,” Princeton computer science professor Olga Russakovsky said. “It’s hard to think globally since we’re a homogenous population.”

AI-Caused Socioeconomic Inequality

AI-powered hiring may jeopardize DEI projects if organizations ignore AI algorithms’ prejudices. Facial and voice analysis by AI still perpetuates racial prejudices in employment.

AI-driven job loss is another problem that reveals AI’s class biases. Automation has cut the wages of blue-collar workers in manual, repetitive jobs by as much as 70%. White-collar employees have been mostly unaffected, and some have even been paid more.

Claims that AI has overcome societal barriers or produced more employment are incomplete. Race, class, and other distinctions must be considered. Otherwise, determining how AI and automation help some and hurt others becomes harder.

AI weakening ethics and goodwill

Technologists, journalists, politicians, and religious leaders are warning about AI’s socio-economic risks. Pope Francis cautioned against AI’s propensity to “circulate tendentious thoughts and misleading facts” at a 2019 Vatican summit titled “The Common Good in the Digital Age” and warned of the dire repercussions of allowing this technology to evolve unchecked.

“If so-called technological progress were to get in the way of the common good,” he said, “this would be a sad return to a kind of barbarism where the strongest win.”

Others worry that we’ll keep pushing artificial intelligence if it makes money, no matter how many influential personalities warn us.

AI-Powered Weapons

“The essential dilemma for mankind now is whether to launch or avoid a global AI weapons race,” AI and robotics researchers wrote in an open letter on autonomous weapons. If any major military force makes AI weapons, they warned, there will almost certainly be a global arms race. The endpoint of this technological path is clear: autonomous weapons will become the Kalashnikovs of the future.

Lethal autonomous weapon systems, which find and kill targets on their own, have borne out this prediction. Some of the world’s most powerful countries have succumbed to their fears and sparked a technological cold war as a result of the advancement of powerful and complex weaponry.

When autonomous weapons get into the wrong hands, they become even more dangerous to people. Hackers may infiltrate autonomous weapons and cause total destruction.

AI-Caused Financial Crises

AI trading algorithms don’t take into account context, how markets are linked, how people trust and fear each other, or how people think or feel. These algorithms execute hundreds of trades within seconds, aiming to sell for modest gains. Selling off thousands of trades could scare other investors into doing the same thing, which could cause the market to crash or become extremely volatile.
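
The cascade described above can be sketched with a toy model. Everything here is an assumption made for illustration: `simulate_selloff`, the idea that each algorithm sells once the price falls to its own threshold, and the fixed price impact per sale are hypothetical and far simpler than any real market or trading system.

```python
def simulate_selloff(start_price, thresholds, initial_shock, impact_per_sale):
    """Return the final price and number of sellers after an initial price drop.

    Hypothetical toy model: each algorithm holds one position and sells it
    as soon as the price is at or below its threshold. Every sale pushes
    the price down by a fixed amount, which can trigger further sales.
    """
    price = start_price - initial_shock
    holders = sorted(thresholds, reverse=True)
    sold = 0
    triggered = True
    while triggered:
        triggered = False
        still_holding = []
        for threshold in holders:
            if price <= threshold:
                # This algorithm sells, and its sale lowers the price further.
                price -= impact_per_sale
                sold += 1
                triggered = True
            else:
                still_holding.append(threshold)
        holders = still_holding
    return price, sold


thresholds = [99, 98, 97, 96, 95]
print(simulate_selloff(100, thresholds, initial_shock=0.5, impact_per_sale=1))
print(simulate_selloff(100, thresholds, initial_shock=1, impact_per_sale=1))
```

With these made-up numbers, a shock of 0.5 triggers no sales at all, while a shock of 1 sets off every algorithm in turn. The point of the sketch is only that a small difference in the initial drop can separate a non-event from a chain reaction.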

Whether deliberate or not, the 2010 flash crash and the Knight Capital flash crash show what may happen when trade-happy algorithms go nuts.

AI has value for finance. AI algorithms help investors make better market selections. Finance companies must understand their AI systems and how they make choices. Before using AI, companies should figure out if it makes investors feel more confident or less confident. This will help avoid investor worries and financial instability.

AI Risk Mitigation

AI still has benefits, such as organizing health data and powering self-driving vehicles. To get the most out of this promising technology, though, some say much more regulation is needed.

- Create global laws.

- Establish organizational AI standards.

- Humanize tech.

The U.S. and the European Union are drafting clearer laws on AI, and regulation has become a top priority for many countries. This may mean that certain AI technologies are outlawed, but it does not stop societies from studying the field. Ford thinks AI is essential for countries that want to innovate and compete.

“You govern how AI is used, but you don’t hold back a fundamental technology. That would be foolish and dangerous,” Ford remarked. “We determine where AI is allowed and where it isn’t.”

The key is ethical AI use. Businesses can take several steps when integrating AI into their operations. Companies can monitor their algorithms, gather high-quality data, and explain the results their AI algorithms produce. Leaders could even make AI a part of the way they do business by setting standards for acceptable AI technology.
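
One way a company might monitor an algorithm is to compare how often it selects people from different groups. The sketch below is an assumption about what such a check could look like, not a description of any particular company’s practice: the function names, the group labels, and the data are hypothetical, and the 0.8 cutoff is borrowed from the “four-fifths” rule of thumb used in U.S. employment guidelines.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Map each group to the share of its members the algorithm selected.

    `decisions` is a list of (group, was_selected) pairs.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Divide the lowest group selection rate by the highest one."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so no measurable gap
    return min(rates.values()) / highest


def needs_review(rates, threshold=0.8):
    """Flag the algorithm when the ratio falls below the assumed cutoff."""
    return disparate_impact_ratio(rates) < threshold


# Hypothetical hiring decisions for two made-up groups.
sample = [("A", True)] * 6 + [("A", False)] * 4 + [("B", True)] * 3 + [("B", False)] * 7
rates = selection_rates(sample)
print(rates, needs_review(rates))
```

A check like this does not explain why a gap exists. It only tells a team where to look more closely at the data and the model.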

Implementing ethical AI

“AI creators must seek the insights, experiences, and concerns of people across ethnicities, genders, cultures, and socio-economic groups, as well as those from other fields, such as economics, law, medicine, philosophy, history, sociology, communications, human-computer interaction, psychology, and Science and Technology Studies (STS).”

By balancing high-tech innovation with human-centered thinking, we can make sure that technology is used in a responsible way and that AI has a bright future. Leaders should acknowledge the risks of artificial intelligence so they can use it for good.

“We can speak about all these concerns, and they’re quite real,” Ford added. “But AI is also going to be our most significant tool for tackling our toughest challenges.”